Demystifying Data Science and Unlocking its Power

Welcome to our blog post, where we embark on a journey to demystify the fascinating world of data science. In today's data-driven era, understanding the power of data science is more important than ever. From uncovering hidden patterns to making informed decisions, data science has become a game-changer in various industries.

But what exactly is data science? At its core, data science involves extracting valuable insights from data through a combination of statistical analysis, machine learning, and data visualization techniques. It goes beyond simply collecting information and delves into uncovering meaningful patterns and trends that can drive business growth and innovation.

The demand for data scientists has soared in recent years, as organizations recognize the potential of leveraging data to gain a competitive edge. These professionals possess a unique blend of technical skills, domain knowledge, and analytical thinking, allowing them to navigate complex datasets and extract valuable insights.

The purpose of this blog post is to provide you with a comprehensive guide to data science. Whether you're a beginner curious about the field or an experienced professional looking to expand your knowledge, we've got you covered. We'll explore the fundamental concepts, the data science workflow, essential tools and technologies, key techniques and algorithms, ethical considerations, real-world applications, challenges, and future directions.

So, let's dive into the world of data science together and unravel its mysteries. By the end of this blog post, you'll have a solid understanding of data science and its incredible potential to transform the way we work, live, and make decisions. Are you ready? Let's get started!

I. Understanding the Fundamentals of Data Science

Alright, let's begin our exploration of the fundamental concepts of data science. So, what exactly is data science?

Data science is a multidisciplinary field that combines mathematics, statistics, computer science, and domain knowledge to extract insights and knowledge from data. It involves a series of steps and techniques to collect, clean, analyze, and visualize data in order to uncover meaningful patterns and trends.

To give you a clearer picture, let's break down the key components of data science:

1. Data collection and preprocessing: The first step is to gather relevant data from various sources such as databases, APIs, or even manual collection. Once collected, the data needs to be cleaned and preprocessed to remove any inconsistencies, errors, or missing values. This ensures that the data is in a suitable format for analysis.

2. Exploratory data analysis (EDA): In this stage, data scientists dive deep into the dataset to understand its characteristics. They use descriptive statistics and data visualization to uncover patterns, trends, correlations, outliers, and potential relationships between variables, which in turn guide how the data should be modeled.

3. Statistical modeling and machine learning: After gaining a thorough understanding of the data, data scientists utilize statistical modeling and machine learning algorithms to build predictive or descriptive models. These models are trained on historical data and can be used to make predictions, classify data, or uncover hidden patterns. The choice of algorithms depends on the problem at hand and the nature of the data.

4. Data visualization and communication: Once the analysis is complete, data scientists employ data visualization techniques to present their findings in a visually appealing and easily understandable manner. Visualizations such as charts, graphs, and interactive dashboards help stakeholders comprehend complex information and make informed decisions based on the insights derived from the data.
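To make the first two steps concrete, here is a minimal sketch in plain Python (standard library only) that removes duplicate rows, fills missing values with the column median, and then summarizes one column, flagging outliers with Tukey's "1.5 times the interquartile range" rule. The records, the choice of median imputation, and the outlier rule are illustrative assumptions rather than the only reasonable options; in practice, pandas offers `DataFrame.drop_duplicates` and `DataFrame.fillna` for the same cleaning steps.

```python
import statistics

# Hypothetical records for illustration: (customer_id, age, monthly_spend).
# None marks a missing value, and one row is an exact duplicate.
RAW = [
    (1, 34, 120.0),
    (2, None, 80.0),
    (3, 29, 95.0),
    (3, 29, 95.0),    # duplicate row
    (4, 41, None),
    (5, 38, 2000.0),  # unusually large spend
]


def clean(rows):
    """Drop exact duplicate rows, then fill each missing value with its column's median."""
    unique = list(dict.fromkeys(rows))  # keeps the first occurrence, preserves order
    filled_columns = []
    for column in zip(*unique):
        present = [v for v in column if v is not None]
        median = statistics.median(present)
        filled_columns.append([median if v is None else v for v in column])
    return [tuple(row) for row in zip(*filled_columns)]


def summarize(values):
    """Descriptive statistics plus Tukey's 1.5 * IQR rule for flagging outliers."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
        "outliers": [v for v in values if v < low or v > high],
    }


cleaned = clean(RAW)
spend = [row[2] for row in cleaned]
print(cleaned)
print(summarize(spend))
```

The median is used for imputation here because, unlike the mean, it is barely affected by the 2000.0 outlier; note how far that single value pulls the mean of the spend column above its median.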

By following this data science workflow, professionals can unlock the power of data and transform it into valuable insights that drive business decisions, optimize processes, and solve real-world problems.

In the next sections, we'll delve deeper into each step of the workflow, exploring various techniques, tools, and algorithms used along the way. So, stay tuned as we unravel the secrets of data science and equip you with the knowledge to embark on your own data-driven journey.

II. The Data Science Workflow

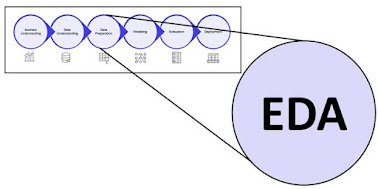

Now that we have a good grasp of the fundamental concepts, let's take a closer look at the data science workflow. This workflow serves as a roadmap for data scientists to navigate through the various stages of a data science project. Let's break it down step by step:

1. Problem formulation and understanding the business context: The first and most crucial step is to clearly define the problem at hand. Data scientists need to understand the business objectives, ask the right questions, and identify how data science can contribute to solving the problem. This involves collaborating with stakeholders to define the project goals, scope, and success criteria.

2. Data collection and cleaning: Once the problem is defined, the next step is to gather the necessary data. This may involve extracting data from databases, APIs, web scraping, or working with pre-existing datasets. The collected data often requires cleaning, which includes handling missing values, removing duplicates, and addressing any inconsistencies or outliers. Data cleaning ensures that the data is reliable and accurate for subsequent analysis.

3. Exploratory data analysis (EDA): With clean data in hand, data scientists perform exploratory data analysis to gain insights and understand the characteristics of the dataset. This involves summarizing the data using descriptive statistics, visualizing the data through charts and graphs, and exploring relationships between variables. EDA helps in identifying patterns, trends, and potential issues in the data.

4. Feature engineering and selection: In this step, data scientists transform the raw data into meaningful features that can be used by machine learning algorithms. Feature engineering involves creating new features, combining existing ones, and scaling or normalizing the data. Feature selection focuses on identifying the most relevant features that have the most impact on the target variable, reducing dimensionality and improving model performance.

5. Model building and evaluation: Here comes the exciting part—building predictive or descriptive models using various machine learning algorithms. Data scientists train these models on historical data and then evaluate their performance using appropriate evaluation metrics. This step involves splitting the data into training and testing sets, tuning hyperparameters, and applying cross-validation techniques to ensure robustness.

6. Model deployment and productionisation: Once a satisfactory model is developed, it's time to deploy it into a production environment where it can make predictions or generate insights in real time. This may involve integrating the model into an application, setting up APIs, or designing workflows for automated data processing. Data scientists collaborate with software engineers and IT teams to ensure a smooth deployment process.

7. Monitoring and maintenance: Models are not a one-time endeavor—they need to be monitored and maintained over time. Data scientists need to continuously track model performance, retrain models as new data becomes available, and address any drift or degradation in performance. Monitoring helps to ensure that the model remains accurate and reliable, and adjustments can be made if needed.
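Step 5 mentions splitting the data and cross-validation. Below is a minimal sketch of k-fold cross-validation in plain Python: the sample indices are shuffled once and split into k folds, and each fold takes one turn as the held-out test set while the model is fitted on the remaining folds. The "model" is a deliberately tiny, hypothetical one-feature threshold classifier whose cut-off is tuned on the training folds only, and the data are synthetic; scikit-learn's `KFold` and `cross_val_score` cover the same ground for real estimators.

```python
import random
import statistics


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the indices once, then yield (train_idx, test_idx) for each of k folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # round-robin split into k nearly equal folds
    for i, test_idx in enumerate(folds):
        train_idx = [j for fold in folds[:i] + folds[i + 1:] for j in fold]
        yield train_idx, test_idx


def fit_threshold(xs, ys):
    """Hypothetical toy model: pick the cut-off t (predict 1 when x >= t)
    that gives the best accuracy on the training data."""
    best_t, best_acc = None, -1.0
    for t in sorted(set(xs)):
        acc = sum((x >= t) == bool(y) for x, y in zip(xs, ys)) / len(xs)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t


def cross_val_accuracy(xs, ys, k=5):
    """Fit on k-1 folds, score accuracy on the held-out fold, repeat k times."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        t = fit_threshold([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        correct = sum((xs[i] >= t) == bool(ys[i]) for i in test_idx)
        scores.append(correct / len(test_idx))
    return statistics.mean(scores), scores


xs = list(range(20))
ys = [1 if x >= 10 else 0 for x in xs]  # synthetic labels for illustration
mean_acc, fold_scores = cross_val_accuracy(xs, ys, k=5)
print(f"fold accuracies: {fold_scores}, mean: {mean_acc:.2f}")
```

Any preprocessing that is learned from the data, such as the scaling in step 4, should likewise be fitted inside each training fold, so that no information from the test fold leaks into the model.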

By following this data science workflow, data scientists can effectively tackle complex projects, extract valuable insights, and deliver actionable solutions to real-world problems. In the next sections, we'll dive deeper into the specific techniques, tools, and technologies utilized within each step of the workflow. So, let's continue our data science journey together and uncover the secrets behind each stage.

III. Essential Tools and Technologies in Data Science

Now that we have a solid understanding of the data science workflow, let's explore the essential tools and technologies that power this field. From programming languages to data manipulation tools, a data scientist's toolkit is filled with resources to extract insights from data. Let's take a closer look at some of these key tools:

1. Programming languages:

● Python: Python is widely regarded as the go-to language for data science. It offers a rich ecosystem of libraries and frameworks such as NumPy, pandas, and scikit-learn, which provide powerful tools for data manipulation, analysis, and machine learning.

● R: R is another popular programming language specifically designed for statistical analysis and data visualization. It offers a wide range of packages and libraries, making it a preferred choice for statisticians and researchers.

2. Data manipulation and analysis tools:

● SQL (Structured Query Language): SQL is a declarative query language used to manage and manipulate relational databases. It enables data scientists to query databases, extract specific data subsets, and perform aggregation operations for analysis.

● Apache Spark: Apache Spark is a distributed computing framework that enables processing large-scale data in parallel. It provides libraries for data manipulation, querying, and machine learning, making it ideal for big data analytics.
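The SQL work described above (querying a table, extracting a subset, and aggregating it) can be tried without a database server by using Python's built-in `sqlite3` module. The `orders` table and its rows below are hypothetical, invented for illustration.

```python
import sqlite3

# In-memory database with a hypothetical 'orders' table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("north", 120.0), ("south", 80.0), ("north", 60.0), ("east", 200.0), ("south", 40.0)],
)

# Extract a subset (orders above 50), then aggregate per region.
query = """
    SELECT region, COUNT(*) AS n_orders, SUM(amount) AS total
    FROM orders
    WHERE amount > 50
    GROUP BY region
    ORDER BY total DESC
"""
rows = conn.execute(query).fetchall()
for region, n_orders, total in rows:
    print(f"{region}: {n_orders} orders, total {total:.2f}")
conn.close()
```

The same `SELECT ... WHERE ... GROUP BY` pattern carries over to other relational databases, although SQL dialects differ in their details.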

3. Machine learning frameworks and libraries:

● Scikit-learn: Scikit-learn is a powerful and user-friendly machine learning library for Python. It offers a wide range of algorithms for classification, regression, clustering, and more. It also provides tools for model evaluation and selection.

● TensorFlow: TensorFlow is an open-source machine learning framework developed by Google. It is particularly popular for deep learning tasks and enables the creation of neural networks for complex data analysis.

● PyTorch: PyTorch is another widely used deep learning framework that provides dynamic computational graphs, making it easy to build and train neural networks. It is known for its flexibility and efficient GPU utilization.

4. Data visualization tools:

● Matplotlib: Matplotlib is a popular data visualization library for Python. It provides a wide range of customizable plots and charts, allowing data scientists to create visually appealing visualizations.

● Tableau: Tableau is a powerful data visualization tool that offers an intuitive interface for creating interactive dashboards and reports. It enables data exploration and storytelling through visually engaging visualizations.

● Power BI: Power BI is a business intelligence tool by Microsoft that allows data scientists to create interactive dashboards, reports, and visualizations. It offers seamless integration with other Microsoft products and cloud services.

These are just a few examples of the tools and technologies available in the data science landscape. As the field continues to evolve, new tools and frameworks emerge, offering innovative ways to analyze and derive insights from data. The choice of tools depends on specific project requirements, personal preferences, and the data science ecosystem within an organization.

In the next sections, we'll explore the key techniques and algorithms used in data science, enabling you to dive deeper into the analytical aspects of this fascinating field. So, let's continue our data science journey and unlock the secrets behind these techniques together.

IV. Key Techniques and Algorithms in Data Science

Now that we have covered the essential tools and technologies in data science, let's delve into the key techniques and algorithms that drive data analysis and modeling. These techniques form the backbone of data science and provide the means to extract valuable insights and make predictions from data. Let's explore some of the fundamental techniques:

1. Supervised learning algorithms:

● Linear regression: Linear regression is a widely used technique for modeling the relationship between a dependent variable and one or more independent variables. It is used for predicting continuous numerical values.

● Logistic regression: Logistic regression is used for binary classification problems, where the target variable has two possible outcomes. It predicts the probability of an event occurring.

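● Decision trees and random forests: Decision trees recursively split the data on feature values (for example, whether income is above or below a threshold), forming a hierarchy of if/else rules that suits both classification and regression tasks. Random forests combine many decision trees, each trained on a random sample of the data and considering random subsets of features, and aggregate their predictions (by averaging or majority vote) to improve accuracy and reduce overfitting.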

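● Support Vector Machines (SVM): SVM is a powerful algorithm for both classification and regression tasks. For classification, it finds the hyperplane that separates the classes with the widest possible margin; its regression variant (support vector regression) fits a function that keeps most data points within a margin of tolerance.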
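As a sketch of the linear regression bullet, the function below fits a single-feature model y = intercept + slope * x by ordinary least squares, using the closed-form formulas slope = Sxy / Sxx and intercept = mean(y) - slope * mean(x). The data points are made up for illustration; with several features, the same idea is usually solved in matrix form, for example by scikit-learn's `LinearRegression`.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = intercept + slope * x with one feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))  # co-variation of x and y
    sxx = sum((x - mean_x) ** 2 for x in xs)                        # spread of x
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Made-up data that roughly follows y = 1 + 2x.
xs = [1, 2, 3, 4, 5]
ys = [2.9, 5.1, 7.0, 9.2, 10.8]
intercept, slope = fit_simple_linear_regression(xs, ys)
print(f"y = {intercept:.2f} + {slope:.2f} * x")
print("prediction at x = 6:", intercept + slope * 6)
```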
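Logistic regression can be sketched in the same spirit: the linear score w * x + b is passed through the sigmoid function to produce a probability, and w and b are fitted by batch gradient descent on the average log-loss. The single, already-centered feature, the learning rate, and the number of epochs are illustrative assumptions; library implementations such as scikit-learn's `LogisticRegression` use more sophisticated solvers and apply L2 regularization by default.

```python
import math


def sigmoid(z):
    """Map a real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(xs, ys, lr=0.1, epochs=2000):
    """Batch gradient descent on the average log-loss for one feature."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # derivative of the log-loss w.r.t. the score
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Toy binary data with one centered feature; the classes overlap near 0.
xs = [-1.75, -1.25, -0.75, -0.25, 0.25, 0.75, 1.25, 1.75]
ys = [0, 0, 0, 1, 0, 1, 1, 1]
w, b = fit_logistic_regression(xs, ys)
for x in (-1.0, 0.0, 1.0):
    print(f"P(y=1 | x={x}) = {sigmoid(w * x + b):.3f}")
```

Because the two classes overlap, the fitted weight stays finite; on perfectly separable data, unregularized logistic regression would keep growing its weights, which is one reason libraries regularize by default.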

2. Unsupervised learning algorithms:

● Clustering techniques: Clustering algorithms, such as K-means and hierarchical clustering, group similar data points together based on their characteristics, allowing data scientists to identify natural patterns and structures within the data.

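● Principal Component Analysis (PCA): PCA is a dimensionality reduction technique used to transform high-dimensional data into a lower-dimensional space. It constructs new variables, called principal components, which are linear combinations of the original features ordered by how much of the data's variance they capture; keeping only the first few components often preserves most of the variation with far fewer dimensions.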

● Association rule mining: Association rule mining, exemplified by the Apriori algorithm, discovers rules of the form "customers who buy X also tend to buy Y" in large transactional datasets, which makes it popular for market basket analysis and recommendation systems.

● Anomaly detection: Anomaly detection algorithms identify unusual or rare data points that deviate significantly from the normal pattern. These algorithms are vital in fraud detection, network security, and outlier detection tasks.
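For the clustering bullet, here is a minimal sketch of K-means using Lloyd's algorithm: assign every point to its nearest centroid, move each centroid to the mean of its assigned points, and stop once the centroids no longer change. The two-blob dataset and the simple random initialization (k distinct data points) are assumptions for illustration; scikit-learn's `KMeans` defaults to the smarter k-means++ initialization.

```python
import math
import random


def k_means(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid updates."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # assignments have stabilised
        centroids = new_centroids
    return centroids, clusters


# Two made-up, well-separated groups of 2-D points.
points = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
centroids, clusters = k_means(points, k=2)
print("centroids:", sorted(centroids))
```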
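Association rule mining can be sketched with a compact version of Apriori: it counts itemset support level by level, only builds larger candidate itemsets whose smaller subsets were all frequent, and then turns the frequent itemsets into rules scored by confidence and lift. The baskets and thresholds below are invented for illustration, and this version rescans every basket at each level, so it is meant for small in-memory examples only.

```python
from itertools import combinations

# Hypothetical shopping baskets, invented for illustration.
BASKETS = [
    {"bread", "milk"},
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]


def apriori(baskets, min_support):
    """Return {frozenset(itemset): support} for every itemset meeting min_support."""
    n = len(baskets)
    level = {frozenset([item]) for basket in baskets for item in basket}
    frequent, k = {}, 1
    while level:
        support = {c: sum(c <= basket for basket in baskets) / n for c in level}
        current = {c: s for c, s in support.items() if s >= min_support}
        frequent.update(current)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into candidate k-itemsets.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: keep a candidate only if all of its (k-1)-subsets are frequent.
        level = {
            c for c in candidates
            if all(frozenset(sub) in current for sub in combinations(c, k - 1))
        }
    return frequent


def association_rules(frequent, min_confidence):
    """Yield (lhs, rhs, confidence, lift) for rules lhs -> rhs built from frequent itemsets."""
    for itemset, s in frequent.items():
        for r in range(1, len(itemset)):
            for lhs in map(frozenset, combinations(itemset, r)):
                rhs = itemset - lhs
                confidence = s / frequent[lhs]  # estimate of P(rhs | lhs)
                if confidence >= min_confidence:
                    lift = confidence / frequent[rhs]  # above 1 means a positive association
                    yield set(lhs), set(rhs), confidence, lift


frequent = apriori(BASKETS, min_support=0.4)
found = list(association_rules(frequent, min_confidence=0.7))
for lhs, rhs, conf, lift in found:
    print(f"{sorted(lhs)} -> {sorted(rhs)}: confidence={conf:.2f}, lift={lift:.2f}")
```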

3. Deep learning and neural networks:

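● Convolutional Neural Networks (CNN): CNNs are primarily used in computer vision tasks, such as image classification and object detection. They exploit the spatial structure of grid-like data such as images: convolutional layers slide small learned filters across the input, so the network extracts relevant features automatically, from simple edges in early layers to more complex shapes in deeper ones.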

● Recurrent Neural Networks (RNN): RNNs are designed for sequential data, such as time series and natural language. They maintain a hidden state that is updated at each step of the sequence; this state acts as a memory that lets them capture dependencies and patterns over time.

● Generative Adversarial Networks (GAN): GANs consist of two neural networks, a generator and a discriminator, that are trained against each other: the generator produces synthetic samples, and the discriminator tries to tell them apart from real data. GANs are used for generating new data samples, image synthesis, and data augmentation.
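The core operation behind the CNN bullet is easy to show in isolation: a small filter slides across an image and, at each position, takes a weighted sum of the pixels under it. The sketch below uses no padding and a stride of 1 and, like the convolution layers in common deep-learning frameworks, does not flip the kernel (strictly speaking, that makes it cross-correlation). In a real CNN the filter weights are learned during training; the hand-picked vertical-edge filter and the tiny image here are assumptions for illustration.

```python
def conv2d_valid(image, kernel):
    """2-D convolution with no padding and stride 1 (kernel not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 4x4 "image" that is dark on the left and bright on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-picked filter that responds to a dark-to-bright change from left to right.
edge_filter = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, edge_filter)
for row in feature_map:
    print(row)  # large values mark where the vertical edge is
```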

These are just a few examples of the techniques and algorithms used in data science. Depending on the nature of the problem and the data, data scientists may employ a combination of these techniques or explore more advanced algorithms to derive insights and make accurate predictions.

In the upcoming sections, we'll explore the ethical considerations in data science, real-world applications, challenges, and future directions. So, stay with us as we continue our journey through the exciting landscape of data science.

V. Ethical Considerations in Data Science

As data science continues to evolve and impact various aspects of our lives, it is crucial to address the ethical considerations associated with its practice. The power and potential of data science come with great responsibility, and data scientists must be mindful of the ethical implications of their work. Let's explore some key considerations:

1. Privacy and data protection: Data scientists often work with sensitive and personal data. It is vital to ensure that data privacy and protection regulations are adhered to throughout the entire data lifecycle. Anonymization techniques, secure data storage, and informed consent are some measures to safeguard individuals' privacy.

2. Fairness and bias: Bias in data can lead to unfair outcomes and discriminatory practices. Data scientists should be vigilant in identifying and mitigating bias in both the data itself and the models they develop. Regularly assessing and monitoring the fairness of algorithms is necessary to avoid perpetuating inequalities.

3. Transparency and interpretability: As data-driven decision-making becomes more prevalent, it is essential to ensure transparency in the decision-making process. Data scientists should strive to make their models and algorithms interpretable and provide clear explanations of how predictions or recommendations are made. This promotes accountability and allows stakeholders to understand and question the results.

4. Data governance and accountability: Establishing clear guidelines and protocols for data governance is crucial. Data scientists should be aware of their responsibilities in handling data, ensuring data quality, and maintaining data security. Having proper mechanisms for accountability and audits can help prevent misuse or unauthorized access to data.

5. Ethical use of AI and automation: As data science intersects with artificial intelligence and automation, ethical considerations become even more important. Ensuring that AI systems are designed to prioritize human well-being, mitigate harm, and respect ethical guidelines is paramount. Regular monitoring and testing of AI systems can help identify and rectify any unintended consequences.

6. Social impact and bias in algorithmic decision-making: Algorithms can influence important decisions in areas such as hiring, lending, and criminal justice. It is crucial to address the potential biases and unintended consequences that may arise from algorithmic decision-making. Regularly evaluating the impact of these decisions on different communities and ensuring fairness is vital.
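One simple, commonly used check for the fairness concerns above is demographic parity: compare the rate of positive decisions (for example, loan approvals) across groups. The sketch below computes per-group selection rates and the gap between the highest and lowest rate. The predictions and group labels are hypothetical, and demographic parity is only one of several fairness definitions, which can conflict with one another.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1 = e.g. 'approve') within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions for applicants from two groups, A and B.
predictions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(predictions, groups))
print("demographic parity gap:", demographic_parity_gap(predictions, groups))
```

A large gap does not prove discrimination on its own, but it is a signal to investigate both the data and the model more closely.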

By integrating ethical considerations into the data science process, we can build responsible and inclusive practices that align with societal values. Ethical frameworks, guidelines, and ongoing discussions within the data science community can contribute to the development of responsible data science practices.

In the following sections, we'll explore real-world applications of data science, the challenges faced by data scientists, and the future directions of this rapidly evolving field. So, stay tuned as we continue our data science journey and uncover more insights together.

VI. Real-World Applications of Data Science

Data science has permeated various industries and is revolutionizing the way businesses operate and make decisions. Let's explore some of the real-world applications where data science is making a significant impact:

1. Healthcare: Data science is transforming healthcare by enabling personalized medicine, predicting disease outbreaks, and optimizing treatment plans. It helps analyze large datasets to identify risk factors, develop predictive models for diagnosis, and improve patient outcomes through data-driven insights.

2. Finance and Banking: In the financial sector, data science is used for fraud detection, credit scoring, algorithmic trading, and risk assessment. It helps financial institutions make informed decisions, manage portfolios, and detect anomalies that could indicate fraudulent activities.

3. Retail and E-commerce: Data science plays a crucial role in understanding customer behavior, optimizing pricing strategies, and predicting demand. Recommender systems leverage data to provide personalized product recommendations, enhancing the customer experience and driving sales.

4. Transportation and Logistics: Data science is employed in optimizing supply chains, route planning, and fleet management. It helps reduce delivery times, improve fuel efficiency, and enhance logistics operations through data-driven decision-making.

5. Energy and Utilities: Data science is used in energy forecasting, load optimization, and predictive maintenance of equipment. It helps energy companies optimize resource allocation, reduce costs, and improve efficiency in energy production and distribution.

6. Social Media and Marketing: Data science enables targeted advertising, sentiment analysis, and social media analytics. It helps businesses understand customer preferences, identify trends, and tailor marketing campaigns for maximum impact.

7. Environmental Science: Data science aids in climate modeling, predicting natural disasters, and analyzing environmental data. It helps scientists understand complex ecological systems, monitor air and water quality, and make data-driven policy recommendations for sustainable development.

These are just a few examples of the wide-ranging applications of data science across various industries. The versatility and power of data science allow businesses and organizations to harness the potential of their data and gain a competitive edge in today's data-driven world.

In the next section, we'll discuss the challenges that data scientists encounter and the skills required to excel in this field. So, let's continue our exploration of data science and uncover the intricacies that lie ahead.

VII. Challenges and Skills in Data Science

While data science offers immense opportunities, it also presents challenges that data scientists must overcome. Let's discuss some common challenges faced in this field and the skills required to excel as a data scientist:

1. Data Quality and Data Acquisition: One of the primary challenges is accessing high-quality data. Data scientists often encounter incomplete, inconsistent, or noisy data, which can affect the accuracy and reliability of their analysis. Skills in data cleaning, data preprocessing, and data integration are crucial for addressing these challenges.

2. Scalability and Big Data: With the exponential growth of data, handling large datasets poses scalability challenges. Data scientists need to be proficient in big data technologies, such as Apache Hadoop and Apache Spark, to efficiently process and analyze massive amounts of data.

3. Algorithm Selection and Model Performance: Choosing the right algorithms and models for a given task is critical. Data scientists must have a deep understanding of various algorithms and their strengths and limitations. Evaluating and fine-tuning models to achieve optimal performance requires strong analytical skills and domain expertise.

4. Interpretability and Explainability: As machine learning models become more complex, interpretability becomes crucial. Data scientists need to be able to explain how their models make predictions or decisions, especially in regulated domains like finance and healthcare. Skills in model interpretability techniques, such as feature importance analysis and explanation methods like SHAP or LIME, are essential.

5. Ethical and Legal Considerations: Data scientists face ethical dilemmas and legal constraints in handling sensitive data, ensuring fairness, and addressing biases. Understanding privacy regulations, ethical guidelines, and staying updated on the evolving legal landscape is vital to navigate these challenges responsibly.

6. Continuous Learning and Adaptability: Data science is a rapidly evolving field with new techniques, tools, and technologies emerging regularly. Data scientists must have a strong desire for continuous learning and adaptability to stay current with the latest advancements and industry best practices.
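Permutation feature importance is one model-agnostic way to carry out the feature importance analysis mentioned in point 4: shuffle one feature's column, re-score the model, and treat the increase in error as that feature's importance. In the sketch below, the "trained model" is a hypothetical fixed formula that ignores its third feature, so that feature's importance should come out as exactly zero; the data are synthetic. scikit-learn offers the same idea as `sklearn.inspection.permutation_importance`.

```python
import random


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Average increase in MSE when each feature column is shuffled in turn."""
    baseline = mse(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        rng = random.Random(seed)  # same shuffles for every feature, for a fair comparison
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mse(y, [predict(row) for row in X_perm]) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def model(row):
    """Hypothetical trained model: uses features 0 and 1, ignores feature 2."""
    return 3.0 * row[0] + 0.5 * row[1]


X = [[i, 19 - i, (i * 7) % 20] for i in range(20)]
y = [model(row) + (0.1 if i % 2 else -0.1) for i, row in enumerate(X)]  # small synthetic noise
for name, score in zip(["f0", "f1", "f2"], permutation_importance(model, X, y)):
    print(f"{name}: {score:.3f}")
```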

In addition to these challenges, data scientists require a broad set of skills to excel in their roles. Some key skills include:

● Programming and Data Manipulation: Proficiency in programming languages like Python or R is essential for data manipulation, analysis, and model development.

● Statistical Knowledge: A solid foundation in statistics helps in understanding data distributions, hypothesis testing, and statistical modeling.

● Machine Learning and Data Mining: Knowledge of various machine learning algorithms, data mining techniques, and their applications is crucial for building predictive models.

● Data Visualization: The ability to effectively communicate insights through data visualization is important. Skills in using tools like Matplotlib, Tableau, or Power BI can aid in creating informative visual representations.

● Communication and Collaboration: Data scientists need to effectively communicate their findings to stakeholders, collaborate with cross-functional teams, and translate technical concepts into actionable insights.

By recognizing these challenges and developing the necessary skills, data scientists can navigate the complexities of the field and unlock the true potential of data science.

In the final section, we'll explore the future directions of data science and the exciting possibilities that lie ahead. So, let's continue our data science journey and get a glimpse of what the future holds.

VIII. The Future of Data Science

Data science is a dynamic field that continues to evolve rapidly, driven by advancements in technology, increasing data availability, and growing demand for data-driven decision-making. Let's explore some of the exciting future directions and possibilities in data science:

1. Artificial Intelligence (AI) Integration: The integration of data science with AI will unlock new possibilities for automation, predictive analytics, and intelligent decision-making. AI-powered systems will become more sophisticated, enabling autonomous data analysis, natural language processing, and advanced cognitive capabilities.

2. Explainable AI and Responsible Data Science: As AI becomes more prevalent, the need for explainable and transparent AI systems will grow. Researchers and practitioners are focusing on developing techniques to make AI models more interpretable and accountable, addressing the ethical concerns associated with algorithmic decision-making.

3. Augmented Analytics: Augmented analytics combines machine learning, natural language processing, and data visualization to enhance human decision-making. It empowers business users and domain experts to explore and analyze data independently, reducing reliance on data scientists for routine tasks.

4. Edge Computing and Internet of Things (IoT): With the proliferation of IoT devices and sensors, data science will increasingly move to the edge, enabling real-time data analysis and decision-making. Edge computing reduces latency, improves data privacy, and enables more efficient use of network resources.

5. Deep Learning Advancements: Deep learning, a subset of machine learning focused on neural networks, will continue to advance. Researchers will explore novel architectures, training techniques, and optimization methods to tackle more complex tasks and improve the performance of deep learning models.

6. Interdisciplinary Collaboration: Data science is an inherently interdisciplinary field. The future will see increased collaboration between data scientists, domain experts, social scientists, and policymakers to address complex societal challenges and leverage the potential of data science across various domains.

7. Continuous Learning and Upskilling: To keep pace with the evolving landscape, data scientists will need to embrace continuous learning and upskill themselves. Lifelong learning, attending conferences, participating in online courses, and staying updated with the latest research will be crucial for staying at the forefront of the field.

As the future unfolds, data science will continue to shape industries, drive innovation, and provide valuable insights for decision-making. The possibilities are vast, and the potential for positive impact is tremendous.

Final Thoughts

In conclusion, data science is an exciting and rapidly evolving field that has the potential to revolutionize industries, solve complex problems, and drive innovation. From extracting valuable insights from data to building predictive models and making data-driven decisions, data science has become an indispensable tool for businesses and organizations.

Throughout this blog post, we explored the fundamental concepts of data science, including the tools and technologies used, key techniques and algorithms, ethical considerations, real-world applications, challenges, and future directions. We highlighted the importance of data quality, algorithm selection, interpretability, and ethical practices in data science.

As you delve into the world of data science, remember that it is a multidisciplinary field that requires a combination of technical expertise, analytical skills, and domain knowledge. Embrace continuous learning and stay updated with the latest advancements in the field to stay at the forefront of data science.

Whether you're a seasoned data scientist or just beginning your data science journey, the potential for exploration, discovery, and making a positive impact is immense. So, embrace the power of data, leverage the tools and techniques at your disposal, and strive to contribute to the advancement of data science and its applications.

Thank you for joining us on this data science adventure. May your data-driven endeavors be fruitful, insightful, and transformative. Happy data science exploration!
